For PROCJAM – a jam about procedural generation – I built a proof of concept for a music video generator based on compositional principles.

It combines a simplified emotion representation, a set of music annotation tools built on that representation, and a director/solver that works out how to arrange a specific set of assets and scenes in space and time so that they align with the music.

It uses a very simple Monte Carlo planning scheme: on every frame, it generates thousands of alternative shots, scores each one with a shot-quality heuristic, and picks the best.
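The per-frame loop can be sketched as best-of-N random sampling. This is a minimal illustration, not the project's actual code: the `Shot` fields (normalized subject position and zoom), the assumption that each frame has a music energy value in [0, 1], and the toy `score_shot` heuristic are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Shot:
    # Hypothetical shot parameters: normalized subject position and camera zoom.
    x: float
    y: float
    zoom: float


def random_shot(rng: random.Random) -> Shot:
    # Sample one candidate shot uniformly from the (hypothetical) shot space.
    return Shot(x=rng.random(), y=rng.random(), zoom=rng.uniform(0.5, 2.0))


def score_shot(shot: Shot, energy: float) -> float:
    # Toy heuristic: penalize distance from the rule-of-thirds lines, and
    # prefer tighter zoom when the music energy (assumed in [0, 1]) is high.
    thirds = (min(abs(shot.x - 1 / 3), abs(shot.x - 2 / 3))
              + min(abs(shot.y - 1 / 3), abs(shot.y - 2 / 3)))
    zoom_target = 0.5 + 1.5 * energy
    return -thirds - abs(shot.zoom - zoom_target)


def choose_shot(energy: float, n_candidates: int, rng: random.Random) -> Shot:
    # Generate many random candidates and keep the highest-scoring one.
    candidates = (random_shot(rng) for _ in range(n_candidates))
    return max(candidates, key=lambda s: score_shot(s, energy))


def direct(energies: list[float], n_candidates: int = 1000, seed: int = 0) -> list[Shot]:
    # One independent best-of-N choice per frame.
    rng = random.Random(seed)
    return [choose_shot(e, n_candidates, rng) for e in energies]
```

For example, `direct([0.0, 0.5, 1.0], n_candidates=2000)` returns one shot per frame, drifting toward tighter framing as the energy rises. Because each frame is chosen independently, a real director would likely also need a continuity term so consecutive shots don't jump around.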

Unfortunately, I had very little time to build this somewhat ambitious project, and I haven't gotten back to it since (it's still a sketch!). Still, I think it has potential given the recent improvements in ML models. I believe a model that produces the annotations could be trained and run quite easily, while the director could keep handwritten heuristics built on pattern matching over those annotations. Alternatively, the director could itself be a fully trained model, but then it becomes much harder to find or generate the data needed to train it.

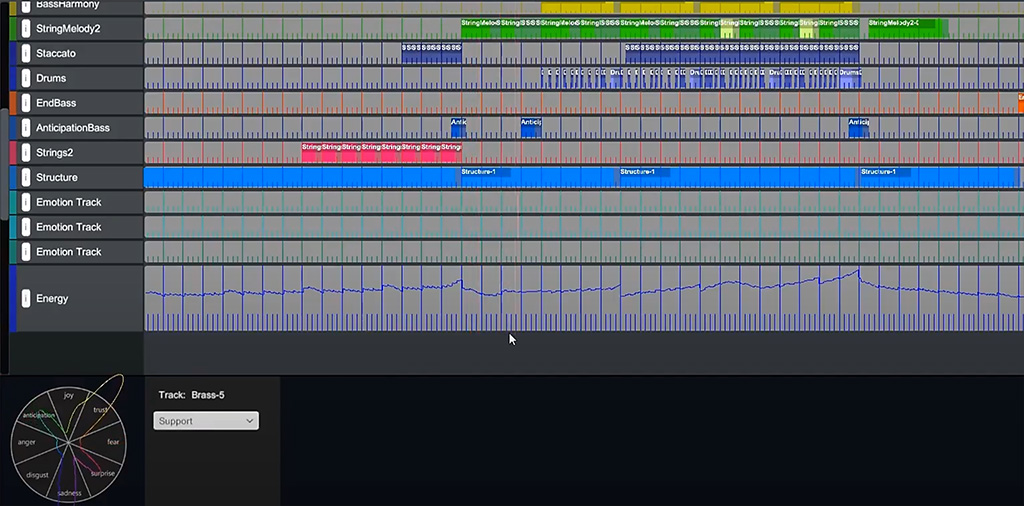

An example track with annotated emotional events, and evaluated energy values


The director's heuristics are essentially encoded principles of composition, ranging from the simple rule of thirds to contrast, repetition (of shape, position, and color), and related concepts such as the Gestalt principles.
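A few of these principles can be turned into a single scalar score as a weighted sum. The sketch below is hypothetical and not the project's heuristic set: the `Element` frame representation, the convention that the first element is the focal subject, the weights, and the choice to measure repetition by shape only are all assumptions made for brevity.

```python
import math
from dataclasses import dataclass
from itertools import combinations


@dataclass
class Element:
    x: float      # normalized horizontal position in [0, 1]
    y: float      # normalized vertical position in [0, 1]
    shape: str    # hypothetical shape label, e.g. "circle"
    color: tuple[float, float, float]  # RGB in [0, 1]


# The four rule-of-thirds intersections ("power points").
POWER_POINTS = [(a, b) for a in (1 / 3, 2 / 3) for b in (1 / 3, 2 / 3)]


def luminance(rgb: tuple[float, float, float]) -> float:
    # Rec. 709 luminance weights, used here as a simple brightness proxy.
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def rule_of_thirds(subject: Element) -> float:
    # 1.0 on a power point, falling linearly to 0.0 at distance >= 1/3.
    d = min(math.dist((subject.x, subject.y), p) for p in POWER_POINTS)
    return max(0.0, 1.0 - 3.0 * d)


def contrast(subject: Element, background: tuple[float, float, float]) -> float:
    # Brightness difference between the subject and the background.
    return abs(luminance(subject.color) - luminance(background))


def repetition(elements: list[Element]) -> float:
    # Fraction of element pairs that share the same shape.
    pairs = list(combinations(elements, 2))
    if not pairs:
        return 0.0
    return sum(a.shape == b.shape for a, b in pairs) / len(pairs)


WEIGHTS = {"thirds": 1.0, "contrast": 0.5, "repetition": 0.25}


def composition_score(elements: list[Element],
                      background: tuple[float, float, float],
                      weights: dict[str, float] = WEIGHTS) -> float:
    subject = elements[0]  # assumption: the first element is the focal subject
    return (weights["thirds"] * rule_of_thirds(subject)
            + weights["contrast"] * contrast(subject, background)
            + weights["repetition"] * repetition(elements))
```

Keeping each principle as a separate term in [0, 1] makes it easy to reweight them per scene, for instance favoring contrast during high-energy passages of the track.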

You can see more details in the talk, but please note it was a very early proof of concept and it needs a lot more work!